I trust you not—or How to build trust with AI products

Trust me, I’m (not) a robot.

Trust is one of the most fragile and fundamental foundations of any interaction. Since prehistoric times, humans have had to decide whether a place is safe to stay in, whether the strangers they meet along the way are safe to be around, and whether the berries others give them are safe to eat.

In psychology, trust is the willingness to rely on someone or something despite uncertainty. In tech, trust means believing that the system is competent, predictable, aligned with your goals, and transparent about limitations.

When you use a calculator, you expect 2+2 to equal 4. Every single time. That’s deterministic software. That’s the kind of trust we’ve built with traditional tools over decades — press a button, get a predictable result.

AI doesn’t work that way.

AI products deal in probabilities, not certainties.

With AI systems that are ambiguous and often operate like black boxes, trust becomes one of the biggest challenges.

The goal is not blind trust but a more nuanced, calibrated trust.

Calibrated trust means users know when to rely on the AI and when to apply their own judgment. (source)

How do we establish trust? How much should a user trust the system? When does too much trust become harmful for both the user and the system? Let’s dive in!

1. Let users test AI before committing

Even before a person starts using a system, they start judging it. In the same way, when we meet a stranger, we start assessing them by how they dress, how they move, and how they speak to others.

With AI systems, we can show what the product is capable of through sample prompts and similar past projects. This lets users build trust before they even start engaging with the software.

In Manus, a simulation that runs for a few seconds lets a user take the system for a test drive, assess the process, and preview the result without investing much effort.

At monday.com, as users set up tasks or refine prompts, a small demo preview of the results lets them build confidence in the system before running the full task and spending credits on it.

This incremental exposure lets users validate the AI’s outputs gradually and start building trust, especially if the tasks are multi-step and require significant time and processing to deliver the results.

2. Match AI responses to user goals

Misaligned depth can erode trust. An answer that is too shallow may make the AI seem dismissive or uninformed. An answer that is too deep may leave the user overwhelmed, confused, and feeling like the system doesn’t “get” them.

Matching depth to the user’s goal and readiness signals competence and reliability, both of which are core to building trust.

Defining the depth of the answer lets the AI adapt to the user’s goal and mental load. Not every interaction needs the same level of detail. Some users just need a quick answer to confirm a fact or make a fast decision. For them, a shallow, concise response is perfect: it gives clarity without extra effort.

Others might be exploring complex topics, making strategic choices, or learning something new. They need context, reasoning, and nuance. For these users, deeper answers should provide insight, examples, and alternative perspectives, helping them fully understand the situation.

Users also avoid the frustration of answers that are either too shallow to be useful or too verbose to digest.

Depth control helps the system provide guidance that is useful, manageable, and relevant.
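One way to make depth control concrete is a small lookup from the user’s goal to a response profile. The sketch below is only an illustration, not how any product mentioned in this article works: the goal labels, the `DepthProfile` fields, the word budgets, and the fallback to a concise answer are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DepthProfile:
    """Hypothetical settings that shape how detailed a response should be."""
    name: str
    max_words: int              # illustrative word budget, not a recommendation
    include_examples: bool
    include_alternatives: bool


QUICK = DepthProfile("quick", max_words=60,
                     include_examples=False, include_alternatives=False)
DEEP = DepthProfile("deep", max_words=600,
                    include_examples=True, include_alternatives=True)

# Assumed goal labels; a real product might infer these from context
# or simply ask the user which level of detail they want.
GOAL_TO_PROFILE = {
    "confirm_fact": QUICK,
    "fast_decision": QUICK,
    "explore_topic": DEEP,
    "strategic_choice": DEEP,
    "learn_something_new": DEEP,
}


def pick_depth(user_goal: str) -> DepthProfile:
    """Return the profile for a known goal; unknown goals get the concise one."""
    return GOAL_TO_PROFILE.get(user_goal, QUICK)
```

Falling back to the concise profile for unknown goals is a design choice in this sketch: a short answer that the user can expand costs less attention than a long one they did not ask for.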

When we understand what users value, what they need, and what limitations they face, we can tailor the experience and the final output to align with those parameters.

This proactive approach reduces misunderstandings, minimizes iterations, and ensures that the final solution genuinely meets user expectations.

3. Show your work: transparency builds confidence

Trust grows when people can see why a decision is suggested. Systems that provide transparency, like showing which data influenced a recommendation or highlighting reasoning steps, allow users to follow the AI’s thought process.

Even if the AI is occasionally wrong, users feel safer because they understand the reasoning behind its suggestions. (source)

When Elicit and Perplexity work on complex tasks, they show their reasoning steps. Users can see the system breaking down their request, considering different sources, and building toward an answer.

Users see the work being done, so the answer feels earned rather than pulled from thin air.

Aside from that, it’s way easier to be patient when you can see progress happening versus wondering if anything is happening at all.

Gemini Deep Research takes users along on the research journey, step by step.

Users see how the research report is being constructed in real time, and the process feels collaborative instead of instructional. Users can edit the plan and adjust the process.

What’s more, the product feels like it is having a human conversation with the user. First-person words such as “my”, “I”, and “me” make the AI system feel like a human companion, not a mindless machine running a search on a query.

In the chat, the user sees a minimal version of the progress, including a brief mention of the sources, which reduces cognitive load for users who do not want to dive into the details.

For complex tasks, building trust takes more than transparency about the steps taken; users also need control over the process.

One example of this principle in action is Replit. The system shows what it is doing as it goes and allows the user to explore and intervene. The user can follow this process like a stream of updates or a log: setting up files, writing functions, or installing dependencies. At any point, the user can open a part of the project, read through it, and edit it.

Contextual action buttons reduce the cognitive burden of figuring out how to proceed next. This transforms the interaction from recall-based to recognition-based, making the interface more accessible to users who struggle with open-ended prompting.

4. Let users verify sources

When Perplexity mentions a statistic or claim, clicking the citation takes users directly to the relevant section of the source document.

This approach builds trust because users can verify information instantly.

Microsoft Copilot builds trust in the answer by referencing the exact piece of content that was used to generate the text and the short summary.

Another way to increase trust in the output is to focus on how the information is structured and presented.

Simple text alone may not be enough to communicate context, relationships, or levels of certainty. In more complex cases, the output becomes stronger when paired with visual structure such as charts, interactive visualisations, or source mapping.

This transparency creates confidence because the reasoning is visible, structured, and verifiable.

Reasoning that is laid out visually feels more honest: nothing is hidden. Strong visual structure turns complexity into clarity, and clarity helps build trust. (source)

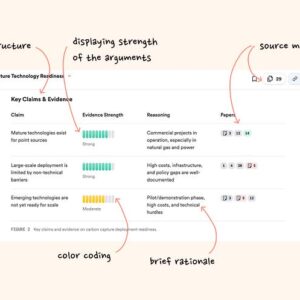

The Consensus app lets users visually scan the search results and quickly assess the answers and the strength of the arguments in the output.

Confidence grows when the system says “evidence strength: moderate” or offers multiple interpretations rather than forcing a single answer.
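A label like this can be derived from very little data. The sketch below is a minimal illustration of the idea, not a description of how Consensus or any other product computes it: the function name `evidence_label`, the inputs (a count of supporting sources out of all sources found), and the 80% and 50% thresholds are assumptions chosen for the example.

```python
def evidence_label(supporting: int, total: int) -> str:
    """Turn a count of supporting sources into a hedged, human-readable label."""
    if supporting < 0 or total < 0 or supporting > total:
        raise ValueError("expected 0 <= supporting <= total")
    if total == 0:
        # With no sources at all, say so instead of guessing.
        return "evidence strength: insufficient"

    share = supporting / total
    # Thresholds are arbitrary illustrative cut-offs.
    if share >= 0.8:
        strength = "strong"
    elif share >= 0.5:
        strength = "moderate"
    else:
        strength = "weak"
    return f"evidence strength: {strength}"
```

For instance, `evidence_label(6, 10)` returns `"evidence strength: moderate"`. The point is less the exact thresholds than that the interface states its uncertainty in plain words.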

5. Enable collaborative edits

The trip planner Layla is a fully interactive AI-based system that lets users preview locations, visually scan the itinerary, and change components of the trip, such as the hotels.

Trip management happens within the main interface alongside the chatbot, which assists the user like a human travel planner would. If a user wants to make global changes to the entire trip, for example to make it cheaper or remove flights, they can do so through the chatbot.

Users can choose from contextually relevant options. For an Amalfi trip, for example, they can skip the boat tour and make the trip cheaper by choosing less expensive hotels.

As users modify plans, the map elements update visually in real time. This full transparency of both process and result makes the experience feel trustworthy.

In Reve AI, as the user makes changes, the system shows context-aware suggestions and recommends next steps based directly on what was just created.

Trust grows because users can clearly see how and why the system is making suggestions, rather than receiving random results. The reasoning is aligned with what the user is trying to achieve at the moment.

Speed increases because the system anticipates needs and keeps momentum, reducing friction and shortening the path from idea to execution.

Transparency combined with guidance becomes a basis for efficiency and credibility.

Wrap up

The future of human–AI interaction will depend on calibrated trust — a balance between confidence and skepticism.

When users can see how decisions are made, explore results visually, intervene in the process, and verify sources instantly, they shift from passive recipients to informed collaborators.

Good luck! ☘️

👋 My name is Mary, I am a product designer and writer. If you are after more articles like this, subscribe to my Substack newsletter and connect with me on LinkedIn.

I trust you not-or How to build trust with AI products was originally published in UX Collective on Medium.