When systems act on their own, experience design is about balancing agency — not just user flow

For years, UX teams have relied on the user journey map, a standard tool of design thinking, to visualize and communicate user intent, behavior, and flow. We’ve diagrammed human goals and emotions across interface steps, tracing the path from discovery to conversion or task completion. These maps assume a mostly linear progression: a series of visible screens, structured tasks, and deliberate choices made by the user.

But in AI-powered systems, those assumptions start to unravel. Steps become invisible. Goals evolve mid-stream. The system no longer waits for a command — it infers, proposes, even acts. As AI takes on more responsibility, the familiar architecture of user flows breaks down. What we once called a “journey” begins to look more like a dialogue or, more accurately, a negotiation.

Traditional journey maps assume a fixed progression of step-by-step tasks, static screens, and clear user intent. In AI-powered experiences such as ChatGPT, Google Gemini, or GitHub Copilot, however, agents take initiative and complete tasks, sometimes invisibly, and control passes back and forth. Consider AI actions where:

- A writing assistant finishes your sentence as you type.

- A design tool applies changes based on loosely defined instructions.

- A browser assistant summarizes web pages, suggests next steps, and acts across domains.

In each case, the system participates in meaning-making and decision-making. These experiences are a loop of mutual influence. Designing for this new reality requires new frameworks — UX models that go beyond step-by-step user task completion and instead account for the dynamic, shared control between human and machine.

As AI systems grow more capable, our job as experience designers changes. It’s no longer just about flows, components, or polish. It means designing around the principles of control-aware UX:

- Intent scaffolding: How do I suggest actions? Help users frame ambiguous goals.

- Clarity and plan preview: What is the AI doing? Why? Show what the system will do before it acts.

- Steerability: Can I change the path? Let users adjust AI behavior mid-task.

- Reversibility: Can I undo what just happened? Provide clear undo and override options.

- Transparency and alignment: Will this system respect my time, my goals, my oversight? Share system reasoning.

This is about shaping the relationship between a person and a system that can now act on its own. That relationship only works when it’s legible, steerable, and grounded in human-centered outcomes.

Managing the balance of control

In his presentation “Software Is Changing (Again)” at Y Combinator’s AI Startup School, Andrej Karpathy, former head of AI at Tesla and one of the most influential voices in applied AI, described this shift in software design as a transition from deterministic, code-driven systems to a new paradigm in which the interface is natural language and the program is the prompt itself.

As Karpathy puts it, “Your prompts are now programs that program the LLM.” But unlike a command or form input, a prompt initiates a probabilistic, interpretive process where the model infers intent and context rather than executing a fixed action.

Karpathy presents the idea of an autonomy slider: a spectrum of interaction that runs from full user control at one end to full AI autonomy at the other. It’s not a toggle. It’s a fluid scale that shifts throughout a session. Sometimes the user leads. Sometimes the AI model proposes or acts. Often, they alternate roles repeatedly, trading control moment by moment.

Karpathy illustrates two fundamental modes of interaction between humans and AI:

1. Human-as-Driver (instruction mode)

- The human gives detailed, explicit commands

- The AI model executes based on those instructions

- Think: prompt engineering, form fills, manual configuration

- UX focus: input clarity and scaffolding, structured guidance

2. Model-as-Driver (autonomy mode)

- The human gives a high-level goal

- The model plans, decides, iterates, and selects

- Think: “Write a draft brief,” “Build me an app,” “What am I missing?”

- UX focus: explainability, oversight and override control, trust signals

These two modes exist in dynamic tension, not as a binary. Users and AI agents fluidly shift control back and forth. Karpathy’s framing shows us that the real UX challenge is not conversation vs. interface — it’s designing for co-agency.

You don’t just want to talk to the operating system [LLM] in text. Text is very hard to read, interpret, understand…a GUI allows a human to audit the work of these fallible systems and to go faster. — Andrej Karpathy, “Software Is Changing (Again)”

After watching Karpathy’s talk, I kept thinking about the “autonomy slider,” the idea that users choose how much control to hand over to an AI. That choice isn’t always about full autonomy, like an AI writing code and submitting pull requests. Sometimes it’s about something more subtle: how much room you give the system to interpret your intent.

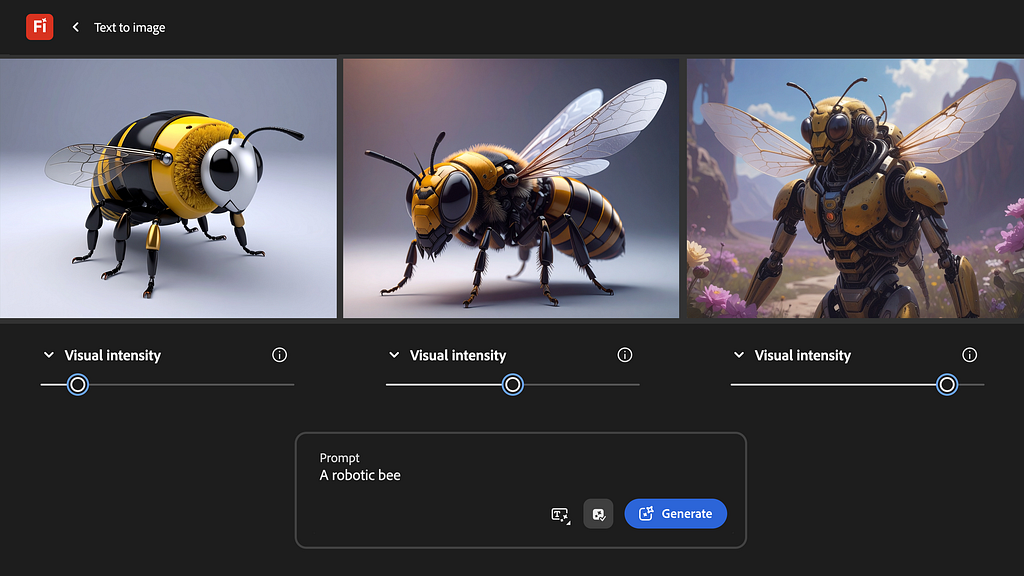

I’ve been using Adobe Firefly since its beta, mostly for generating visual concepts and exploring stylistic directions. And in Firefly, slider controls aren’t hypothetical — they are built into the interface.

Firefly gives me tools to modulate control, not just submit input. The prompt field isn’t the whole interface. It’s just one part of a larger control surface. Around it are sliders — like Visual Intensity and Style Strength — that act as real-time dials for how much agency I’m giving to the model. When I lower the intensity, I’m signaling: stay close to my prompt, stay literal. When I turn it up, I’m inviting interpretation, letting the model take creative liberties.

In effect, I’m choosing how much autonomy to give the AI. This is about shaping the balance of authorship. The system becomes a collaborator, and the sliders become a visible, tactile way of managing our partnership.

UX is changing (again), too

The future of interaction isn’t just about guiding users smoothly from point A to B. It’s about designing relationships between people and models. That shift demands a different kind of UX thinking, one that treats human–AI control states as a core design dimension, not an edge case. And this change hasn’t gone unnoticed. UX leaders across the industry are proposing new human-centered methods for designing with and around intelligent systems.

In the UXmatters article “Beyond the Hype: Getting Real with Human-Centered AI”, Ken Olewiler, co-founder of the design accelerator Punchcut, offers a sharp critique of the current AI landscape, cautioning against the rush to adopt generative AI without grounding it in real user value. While many organizations are experimenting, he notes that few have moved beyond proof of concept to measurable ROI, a gap he attributes to hype-driven decision-making and a lack of human-centered framing.

Avoid attempting to integrate AI automation fully at every stage of the customer lifecycle. Be more selective, integrating AI where it will add the most value. Offer AI features that enable cooperative user control, preserving a meaningful sense of agency for users. — Ken Olewiler, Co-Founder, Punchcut

Olewiler calls for a rethinking of autonomy. While the dominant narrative around AI tends to celebrate full automation, his team’s user research has found that users prefer shared control. He recommends creating autonomy maps that visualize where human and machine agency shift across the experience, echoing service design tools like service blueprints but focused on control. These maps help teams design for co-agency rather than handoffs or black-box automation.

Matt Scharpnick’s BCG post, “UX Design for Generative AI: Balancing User Control and Automation”, echoes Olewiler’s emphasis on balancing user agency with AI automation, reinforcing the need for UX that walks the line between inspiration and precision.

Models can be frustrating to guide toward an exact result. We are still in the early days of GenAI, and there’s plenty of room for innovation — especially in designing interfaces that let users dial in their exact preferences. — Matt Scharpnick, Associate Director, BCG

Scharpnick argues that the future of generative UX lies in designing interfaces that let users “dial in” precisely where they want value and control, without stifling the generative model’s creative potential. This mirrors Olewiler’s call for autonomy maps and shared agency, highlighting a broader UX shift: we must design systems that both spark amazement and support professional-level accuracy.

Mapping control flows: what we can learn from OESDs

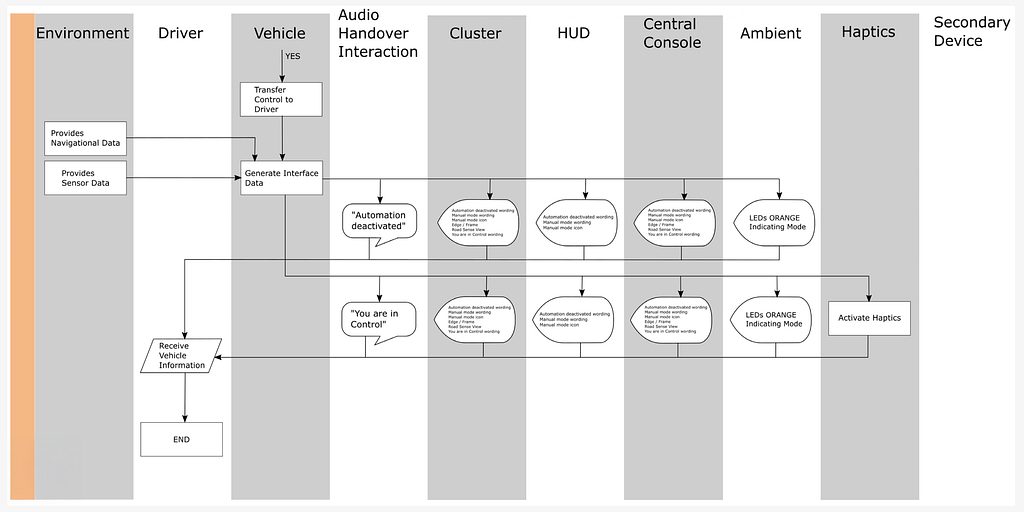

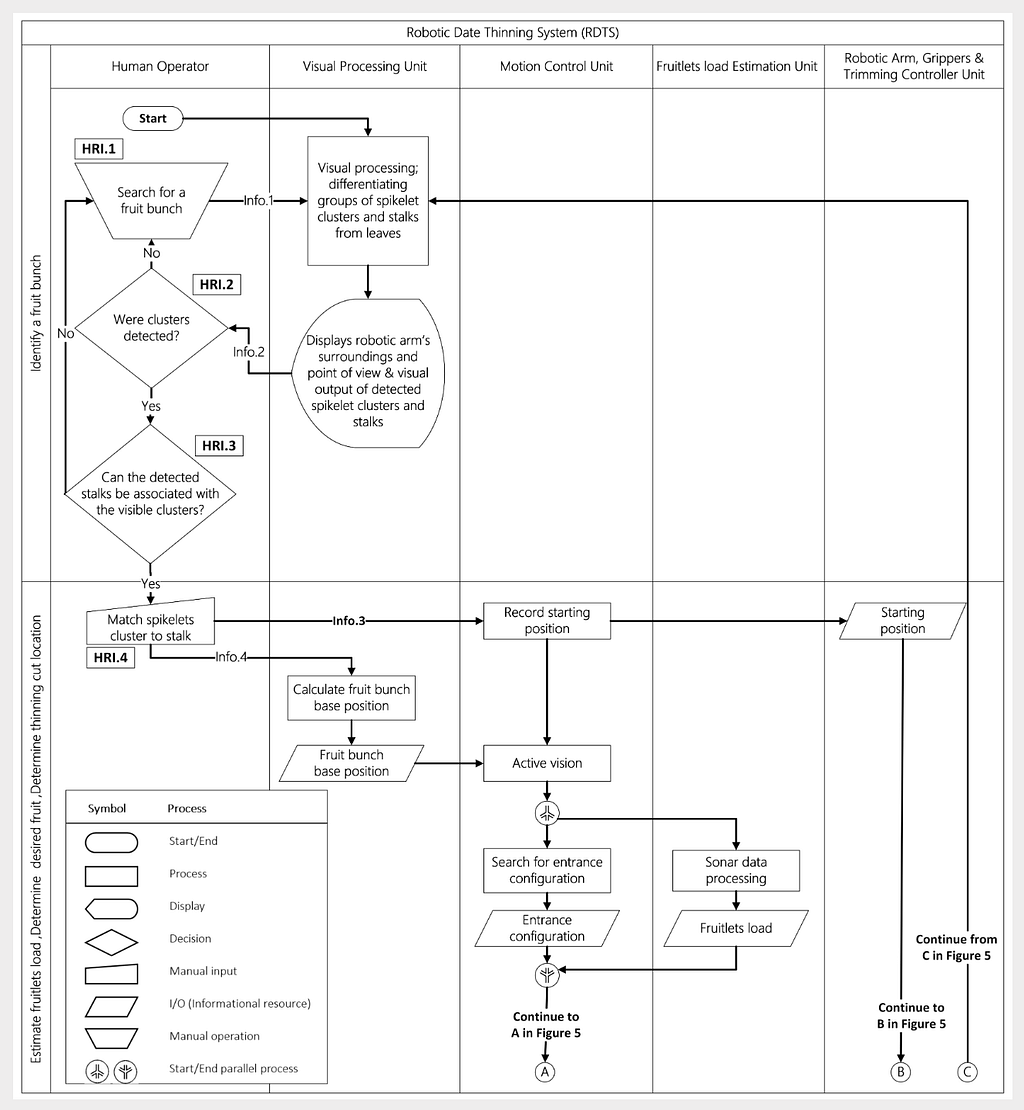

Operator Event Sequence Diagrams (OESDs) are formal models used to visualize how control shifts between human operator(s) and automated systems over time. Developed in safety-critical fields like aerospace, autonomous vehicles, and industrial robotics, OESDs help engineers define who is responsible for each action, what triggers a control handoff, and how systems respond when things go wrong.

These diagrams typically include two or more “swim lanes” — one for the human, one for the machine — and map out sequences of actions, decisions, and handover points. They’re designed not just to capture behavior, but to enforce clear accountability and recoverability in complex, high-risk environments.

OESD examples:

In remote operation of self-driving cars, researchers have used OESDs to model when a remote human should step in — from passive monitoring to active driving — depending on the vehicle’s confidence and context.

In agricultural robotics, OESDs help researchers choreograph shared control tasks, like having a human mark which fruit to harvest while the robot handles the physical cutting.

These models give us a language for describing control as a temporal sequence passed between human and system. They’ve proven effective in domains where clear authority and fail-safes are essential. As UX designers, we can adapt control sequence diagrams to our own challenge: designing not just for control handoffs, but for fluid co-agency between people and AI systems.

From journey maps to control maps

AI systems are transforming the structure of digital interaction. Where traditional software waited for user input, modern AI tools infer, suggest, and act. This creates a fundamental shift in how control moves through an experience or product, and it challenges many of the assumptions embedded in contemporary UX methods.

The question is no longer:

“What is the user trying to do?”

The more relevant question is:

“Who is in control at this moment, and how does that shift?”

Designers need better ways to track how control is initiated, shared, and handed back — focusing not just on what users see or do, but on how agency is negotiated between human and system in real time.

We can adapt OESD-like thinking to broader user experiences with AI systems. User–AI control mapping may be less about static diagrams and more about a mindset: designing for systems that act, but still listen. Key considerations:

- Who is in control — user(s), AI(s), or both

- When and why control shifts — as moments or loops

- How the interface supports those transitions — affordances needed to follow principles of control-aware UX
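To make those three considerations concrete, here is a minimal sketch of a control map as a data structure, written in Python. It is not an established OESD notation or a tool from any of the sources cited here; the `Controller`, `ControlStep`, `handoffs`, and `unassisted_ai_steps` names, and the example steps, are hypothetical. Each step records who holds control, what triggered it, and which interface affordance supports it, and the helper functions surface the handoff moments and any AI-led steps that lack a supporting affordance.

```python
from dataclasses import dataclass
from enum import Enum


class Controller(Enum):
    """Who holds control at a given step (hypothetical labels)."""
    USER = "user"
    AI = "ai"
    SHARED = "shared"


@dataclass(frozen=True)
class ControlStep:
    """One row of a hypothetical control map."""
    action: str
    controller: Controller  # who is in control
    trigger: str            # when and why control lands here
    affordance: str = ""    # how the interface supports this moment (preview, undo, steer)


def handoffs(steps: list[ControlStep]) -> list[tuple[str, Controller, Controller]]:
    """Return (action, from, to) for every step where control changes hands."""
    return [
        (curr.action, prev.controller, curr.controller)
        for prev, curr in zip(steps, steps[1:])
        if prev.controller != curr.controller
    ]


def unassisted_ai_steps(steps: list[ControlStep]) -> list[str]:
    """Flag steps the AI leads or shares that have no supporting affordance."""
    return [
        s.action
        for s in steps
        if s.controller is not Controller.USER and not s.affordance
    ]


# Example loosely based on the writing-assistant and design-tool cases described earlier.
steps = [
    ControlStep("Type opening sentence", Controller.USER, "user intent", "editable text"),
    ControlStep("Propose a completion", Controller.AI, "pause in typing", "inline preview"),
    ControlStep("Accept or dismiss", Controller.USER, "proposal shown", "accept key or keep typing"),
    ControlStep("Rewrite the paragraph", Controller.AI, "loosely defined instruction"),
]

for action, before, after in handoffs(steps):
    print(f"{before.value} -> {after.value}: {action}")
print("Needs an affordance:", unassisted_ai_steps(steps))
```

In this toy map the last step is flagged because the AI acts on a loose instruction with no preview or undo, which is the kind of gap the control-aware UX principles are meant to catch.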

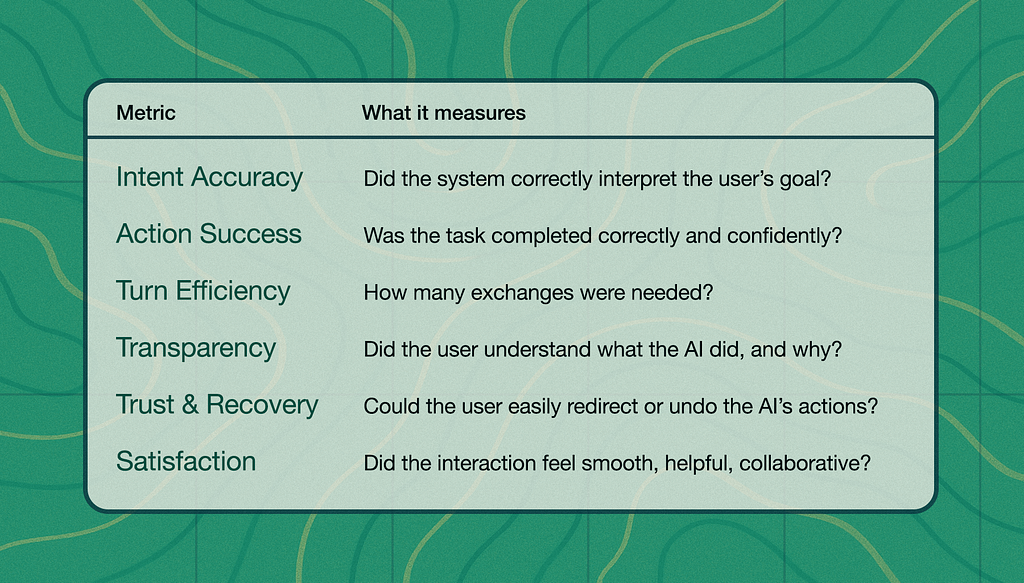

To evaluate whether these dynamics are working, we need new metrics — ones that capture the quality of collaboration, not just completion.

Quality metrics like turn efficiency and intent accuracy aren’t just diagnostics — they’re a way to operationalize trust, alignment, and control in AI-powered design. The goal is clarity, adaptability, and human-centered outcomes, even when control shifts moment to moment.
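The metrics are named here without formal definitions, so the following Python sketch uses hypothetical definitions chosen for illustration, not standard formulas: intent accuracy as the share of AI proposals a user accepts without editing or rejecting, and turn efficiency as a team-chosen baseline number of turns divided by the turns a session actually took, capped at 1.0. The `Turn` record and its `outcome` field are likewise an assumed logging schema.

```python
from dataclasses import dataclass
from typing import Literal, Optional

Outcome = Literal["accepted", "edited", "rejected"]


@dataclass(frozen=True)
class Turn:
    """One turn in a session (hypothetical logging schema)."""
    speaker: Literal["user", "ai"]
    outcome: Optional[Outcome] = None  # only set for AI proposals


def intent_accuracy(turns: list[Turn]) -> float:
    """Share of AI proposals accepted as-is (hypothetical definition)."""
    proposals = [t for t in turns if t.speaker == "ai"]
    if not proposals:
        return 0.0
    accepted = sum(1 for t in proposals if t.outcome == "accepted")
    return accepted / len(proposals)


def turn_efficiency(turns: list[Turn], baseline_turns: int) -> float:
    """Baseline turns divided by actual turns, capped at 1.0 (hypothetical definition)."""
    if baseline_turns <= 0:
        raise ValueError("baseline_turns must be positive")
    if not turns:
        return 0.0
    return min(1.0, baseline_turns / len(turns))


# Example session: the user edited the first proposal, then accepted the second.
session = [
    Turn("user"),
    Turn("ai", "edited"),
    Turn("user"),
    Turn("ai", "accepted"),
]

print(intent_accuracy(session))                    # 0.5
print(turn_efficiency(session, baseline_turns=2))  # 0.5
```

A team would tune the baseline per task; the point of the sketch is that both numbers come from logging who acted and how the user responded, not from task completion alone.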

Ambient AI systems raise the stakes

Ambient Intelligence (AmI) describes environments equipped with embedded sensors that proactively and unobtrusively support users, adapting to context, recognizing behavior patterns, and anticipating needs without explicit commands. This vision spans from smart rooms that adjust lighting to voice assistants that understand spoken cues, and now to Ambient AI services embedded in our digital products. These services work by:

- Reading context without a prompt

- Proposing actions instead of waiting

- Acting autonomously, then receding

Ambient AI introduces a new frontier for digital experience design.

The shift toward ambient AI was on display at Google I/O 2025 in the form of Project Astra. Google introduced the project’s multimodal assistant running on a smartphone, using the camera and audio input to perceive the environment, recognize objects, and interpret spoken language in real time. It represents a step toward context-aware, embodied AI: systems that don’t wait for a prompt, but actively observe, interpret, and assist within the spaces and tools we already use.

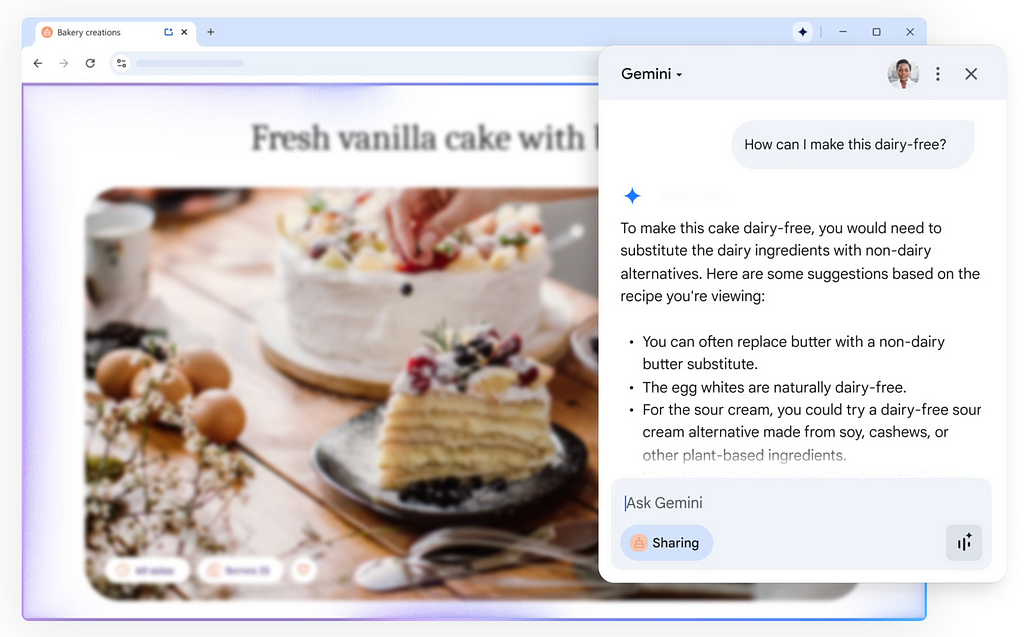

Notably, Google announced that Gemini will be integrated directly into Chrome, moving past AI as a discrete feature and toward Gemini as an omnipresent layer. The browser, once a passive container for websites, becomes an active collaborator: reading context, suggesting actions, and interpreting tasks across domains. The AI assistant doesn’t wait to be opened; it’s simply there, aware of the user’s real-time context and ready to participate.

Google’s position is increasingly clear: own the cross-domain AI layer. This isn’t a niche edge case but a mainstream competitive dynamic, because Chrome is not just any browser. It is the most widely used browser in the world, with more than 60% of the global market. Its advantage, the “moat,” is not just model performance but context continuity across tabs, apps, and sessions.

As the browser becomes conversational, product and UX teams building AI-powered assistants inside their own platforms face a set of urgent questions. If Gemini is already ambient within Chrome, what role remains for domain-specific assistants? Will users prefer site-embedded AI agents tailored to a single brand or task? Or will the dominant web pattern become building for Gemini: ensuring compatibility, clarity, and trust with the AI already riding alongside the user at the browser level?

This isn’t just a new interaction model — it’s a new presence model, where an AI system occupies the full surface of the user’s digital environment. This evolution reframes not just interaction patterns, but the expectations we bring to digital products. It reopens foundational questions that echo far beyond technical UX. And it’s here that voices like Jony Ive’s enter the conversation.

At Stripe Sessions 2025, Jony Ive sat down for a rare conversation on the craft of design and our responsibility as builders. He emphasized that great products aren’t built on novelty or cleverness alone; they emerge from deep care, clarity of intent, and restraint. He also stressed our responsibility to create technology that doesn’t demand more of our attention but gives some of it back. He called for products that recognize that users “have this ability to sense care,” an ethos that resonates with designing AI not as a productivity hack, but as a new kind of collaborator.


The work ahead: designing AI systems with care

In the world of AI-powered experiences, users and models co-author outcomes. As Jony Ive reminds us, we need trust and care at the foundation — not just on the surface. The success of those experiences will depend less on how fast or clever the system is, and more on how well it shares control. As AI tools evolve, control design becomes trust design.

Trust is not earned by hiding complexity. It’s built by making complexity navigable. Systems that act must also explain. Systems that propose must invite critique. Systems that remember must disclose what they remember and why.

Designing for dynamic control means treating the interface not as a tool, but as a relationship. And relationships require clarity, responsiveness, and the ability to say no. The systems we build will increasingly act on our behalf. The question is whether we’ve designed those systems to listen as carefully as they act.

Beyond journey maps: designing for control in AI UX was originally published in UX Collective on Medium, where people are continuing the conversation by highlighting and responding to this story.