Who’s spotting you when you automate

Designing for humans in high-pressure environments.

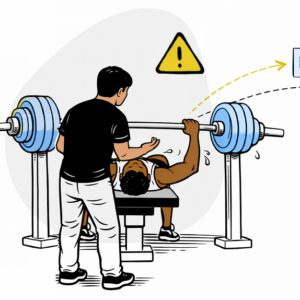

A good spotter reduces your fear of catastrophe. They position themselves close enough to help without interfering with your lift, and you know they’re watching, ready to step in if needed. That certainty lets you feel safe. But what happens when you don’t trust your spotter? You pull back because the risk feels too high. It’s no secret that teams across the industry wrestle with trust, governance, and cross-boundary automation today.

What needs to happen when context awareness shifts from humans to systems?

Automation doesn’t remove responsibility; it relocates it

The benefits of automation aren’t purely operational; automation also reshapes how teams make decisions as their organizations and systems mature. AIOps research reports that automation can detect and resolve issues 30 to 40% faster, enabling engineers to relocate responsibility with greater confidence. One enterprise study found that machine learning-based anomaly detection improved diagnostic accuracy by 25% (HGBR, Oct 2025). That’s a meaningful leap given how challenging root-cause analysis is in distributed environments.

When a deployment pipeline behaves unexpectedly, fear is the most natural human response, and questions quickly emerge: Will drift be caught in time? Will rollback behave as expected? Who is notified if something goes wrong? These questions reveal deeper issues around accountability, governance, and failure boundaries.

Engineers rely on mental models to anticipate how systems behave under pressure, but when automation intervenes unexpectedly, those models fracture and psychological safety diminishes. It’s the same feeling as lifting with a spotter who doesn’t know your patterns: they might step in too early, too late, or at the wrong moment. The same dynamic surfaces in automation. When mental models diverge, engineers default to manual control, increasing operational effort. This failure is common wherever transparency hasn’t been established.

Human-machine teaming research consistently reports that trust collapses when error boundaries aren’t clear. When automation oversteps human context, or when its error conditions don’t match the team’s mental models, it breaks the contract that makes delegation trustworthy and leads to poor decisions (Bansal et al., 2019). Context is one of the strongest foundations of trust.

Psychological safety in automation requires boundaries. Effective automation communicates what it can and cannot do, backed by accountability structures that mirror how teams actually operate. When automation encounters unfamiliar or ambiguous conditions, engineers need immediate clarity: Who gets notified? What rollback options exist? How do we escalate? When the answers fit existing governance practices and team workflows, automation becomes a partner.
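One way to picture these boundaries is as a declaration the automation carries with it. The Python sketch below is a minimal illustration under assumed names: `AutomationBoundary`, `Decision`, and the action, contact, and channel strings are all hypothetical, not part of any ITSM tool. The automation acts alone only inside its declared limits; otherwise it hands off, with the people to notify and the rollback options spelled out.

```python
# Illustrative sketch only: every name, action, and contact here is hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    act_autonomously: bool
    notify: tuple[str, ...]            # who hears about this decision
    rollback_options: tuple[str, ...]  # what humans can fall back on
    reason: str                        # the explanation surfaced to engineers


@dataclass(frozen=True)
class AutomationBoundary:
    allowed_actions: frozenset[str]       # what the automation may do on its own
    max_blast_radius: int                 # e.g. max number of services affected
    escalation_contacts: tuple[str, ...]  # who is paged when it hands off
    rollback_options: tuple[str, ...]
    audit_channel: str                    # where autonomous actions are reported

    def decide(self, action: str, blast_radius: int, familiar: bool) -> Decision:
        def hand_off(reason: str) -> Decision:
            return Decision(False, self.escalation_contacts, self.rollback_options, reason)

        # Unfamiliar or ambiguous conditions always go to a human first.
        if not familiar:
            return hand_off("unfamiliar conditions: escalating")
        if action not in self.allowed_actions:
            return hand_off(f"'{action}' is outside declared boundaries")
        if blast_radius > self.max_blast_radius:
            return hand_off(f"blast radius {blast_radius} exceeds limit {self.max_blast_radius}")
        # Within boundaries: act, and report the action to the audit channel.
        return Decision(True, (self.audit_channel,), self.rollback_options,
                        "within declared boundaries")


boundary = AutomationBoundary(
    allowed_actions=frozenset({"restart_pod", "scale_out"}),
    max_blast_radius=3,
    escalation_contacts=("oncall-sre",),
    rollback_options=("revert_last_deploy",),
    audit_channel="deploy-log",
)
print(boundary.decide("restart_pod", blast_radius=1, familiar=True).act_autonomously)  # True
print(boundary.decide("drop_table", blast_radius=1, familiar=True).reason)
# 'drop_table' is outside declared boundaries
```

The design choice worth noting is that every hand-off returns the same three answers engineers ask for under pressure: who is notified, what can be rolled back, and why the automation stopped.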

In mature IT Service Management (ITSM) practices, approval gates act as intentional pauses triggered by risk, so safety is backed by judgment that can shift between automation and human decision-making. The experience is designed to avoid reducing constraints to ‘yes’ or ‘no’ buttons pressed under immense pressure.

Trust develops over time as automation aligns with organizational and infrastructure patterns as well as team behaviors. As automation demonstrates reliable behavior within learned boundaries, it earns human trust the same way a great spotter does: by observing and responding predictably across repeated interactions.

Temporal awareness UX builds the relationship

Great automation doesn’t block your ITSM approval gate without explanation. Conditional approvals require temporal awareness UX, where past incidents inform thresholds, present status signals establish confidence, and potential future risk determines escalation.

There are novice spotters and good spotters, but a great spotter understands your environment and anticipates your patterns through temporal awareness, because they’ve watched enough of your sets to know them. Any spotter can watch your bar in real time; a great spotter notices it drifting to the right, sees you struggle on rep 3, and gives you feedback after your set.

A commonly overlooked pillar of automation is time.

Temporal awareness UX is the practice of creating experiences that provide transparency into system states across a timeline.

Automation works on the same principle, requiring visibility across three dimensions of time:

Historical traces, organizational policies, logs, and telemetry (Past): Like a spotter coaching you after a set, engineers need data to understand what happened and why. Without it, confidence in automation is harder to build, and debugging becomes guesswork.

Real-time status and observability (Present, “Rep 2”): Like the spotter watching the bar in real time, tools must observe system behavior as it takes place, ready to prevent or alert on imminent failures.

Trend analysis and prediction (Future): Just as an elbow flare on rep 3 signals trouble by rep 5, systems with great visibility detect drift and degradation, stopping chaos before it becomes an incident.

Without this temporal context, approval gates, for example, default to rules set in stone and binary decision options, hindering trust instead of reinforcing it.
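To show what a gate with temporal context might look like, here is a minimal Python sketch. It assumes hypothetical inputs for each dimension: the number of incidents on the service in the last 90 days (past), the current error rate (present), and recent latency samples whose drift is extrapolated with a least-squares linear trend (future). The thresholds, the 500 ms SLO, and the linear-trend heuristic are illustrative assumptions, not a prescribed method. The gate returns its reasons alongside its outcome, so a block never arrives without an explanation.

```python
# Illustrative sketch only: field names, thresholds, and the SLO are assumptions.
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    AUTO_APPROVE = "auto-approve"
    REQUEST_REVIEW = "request human review"
    ESCALATE = "escalate"


@dataclass
class GateContext:
    past_incidents_90d: int    # Past: incidents on this service in the last 90 days
    current_error_rate: float  # Present: fraction of requests failing right now
    latency_ms: list[float]    # Future: recent latency samples, oldest first


def trend_slope(samples: list[float]) -> float:
    """Least-squares slope of the samples against their index (ms per sample)."""
    n = len(samples)
    if n < 2:
        return 0.0
    x_bar = (n - 1) / 2
    y_bar = sum(samples) / n
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(samples))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def evaluate_gate(ctx: GateContext, horizon: int = 10,
                  latency_slo_ms: float = 500.0) -> tuple[Outcome, list[str]]:
    reasons: list[str] = []
    # Past: a recent history of incidents tightens the error-rate threshold.
    threshold = 0.01 if ctx.past_incidents_90d >= 3 else 0.05
    if ctx.past_incidents_90d >= 3:
        reasons.append(f"{ctx.past_incidents_90d} incidents in 90 days: stricter threshold")
    # Present: is the service healthy right now, relative to that threshold?
    unhealthy_now = ctx.current_error_rate > threshold
    if unhealthy_now:
        reasons.append(f"error rate {ctx.current_error_rate:.1%} exceeds {threshold:.0%}")
    # Future: extrapolate the latency trend over the horizon to catch drift early.
    if ctx.latency_ms:
        projected = ctx.latency_ms[-1] + trend_slope(ctx.latency_ms) * horizon
        if projected > latency_slo_ms:
            reasons.append(f"latency projected to reach {projected:.0f} ms")
            return Outcome.ESCALATE, reasons
    if unhealthy_now:
        return Outcome.REQUEST_REVIEW, reasons
    return Outcome.AUTO_APPROVE, reasons


outcome, why = evaluate_gate(GateContext(4, 0.02, [200, 210, 220, 230]))
print(outcome.value)  # request human review
print(why)
```

With these sample inputs, four recent incidents tighten the error-rate threshold to 1%, so a 2% error rate requests human review, even though the latency trend (10 ms per sample, projected to 330 ms) stays under the assumed SLO. With no incident history, the same 2% would auto-approve.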

Boundaries and time foster trust

Boundaries, not gates, drive efficiency by creating a support system when pressure peaks. Automation adoption succeeds or fails based on two dimensions:

– Psychological safety, requiring clear boundaries. Like a trusted spotter, automation must communicate what it will or won’t do, when it’ll intervene, and how teams can escalate when something goes wrong.

– Temporal awareness, requiring visibility across time. Engineers need to understand what happened (to learn from incidents), what’s happening (to stay aware), and what will happen (to prevent failures).

Without these interdependent dimensions, teams operate blindly, unable to trust systems they can’t predict or observe. Safe automation requires a spotter who remembers, observes, and anticipates.

Good automation is functional. Great automation builds these spotter behaviors in by design, making safety and timing intelligible to humans and helping teams push their limits, knowing their partner is watching.

Over the past decade, I’ve focused on enhancing the developer product experience within multi-disciplinary teams. For the past 5+ years, I’ve led design for DevOps workflows, IaC adoption, incident management, and observability within socio-technical environments.

References:

Reducing Mean Time to Repair (MTTR) with AIOps: An Advanced Approach to IT Operations Management, ResearchGate, https://www.researchgate.net/publication/395524114_Reducing_Mean_Time_to_Repair_MTTR_with_AIOps_An_Advanced_Approach_to_IT_Operations_Management (Sept, Aug 2025)

AIOps for Enterprise Integration: Evaluating ML-Based Anomaly Detection and Auto-Remediation on SLAs and Cost, HGBR, https://hgbr.org/research_articles/aiops-for-enterprise-integration-evaluating-ml-based-anomaly-detection-and-auto-remediation-on-slas-and-cost (Oct 2025)

Platform Engineering: From Theory to Practice, Liz Fong-Jones & Lesley Cordero, GOTOpia (GOTO Conference Series), https://www.youtube.com/watch?v=wVi7pNRT0Xk (June 2025)

Modeling trust and its dynamics from physiological signals and embedded measures for operational human-autonomy teaming, Frontiers in Robotics and AI, https://www.frontiersin.org/journals/robotics-and-ai/articles/10.3389/frobt.2025.1624777/full (Oct 2025)

Superagency in the workplace: Empowering people to unlock AI’s full potential, McKinsey, https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work (Jan 2025)

Beyond Accuracy: The Role of Mental Models in Human-AI Team Performance, Bansal et al., https://www.researchgate.net/publication/339697298_Beyond_Accuracy_The_Role_of_Mental_Models_in_Human-AI_Team_Performance (Oct 2019)

Who’s Spotting You When You Automate was originally published in UX Collective on Medium.