Opinion

We are confusing motion with progress. In physics, this mistake has a name: speed without direction. In civilisation, it has a cost.

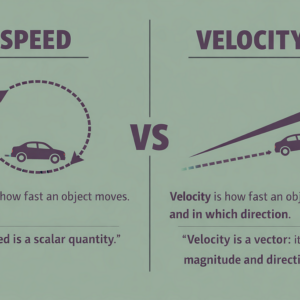

Here’s a truth from physics that should concern every executive racing toward AI dominance: speed tells you how fast you’re moving. Velocity tells you how fast you’re moving — and in which direction.

A car travelling at 100 mph around a circular track has tremendous speed, but every time it completes a lap its average velocity is zero: it is back exactly where it started. It goes nowhere. It only burns fuel, overheats the engine, and eventually becomes a hazard to everything nearby.
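For readers who want the arithmetic, here is a minimal sketch of that distinction. The 100 mph figure comes from the example above; the one-mile track radius and the function names are assumptions made purely for illustration.

```python
import math


def position(speed, radius, t):
    """(x, y) position at time t of a car circling the origin at constant speed."""
    angle = speed * t / radius  # radians covered so far
    return (radius * math.cos(angle), radius * math.sin(angle))


def average_speed_and_velocity(speed, radius, duration):
    """Return (average speed, magnitude of average velocity) over `duration` hours."""
    x0, y0 = position(speed, radius, 0.0)
    x1, y1 = position(speed, radius, duration)
    distance = speed * duration                   # path length actually driven
    displacement = math.hypot(x1 - x0, y1 - y0)   # straight line from start to end
    return distance / duration, displacement / duration


SPEED_MPH = 100.0     # from the example in the text
RADIUS_MILES = 1.0    # assumed track radius, for illustration only

lap_time = 2 * math.pi * RADIUS_MILES / SPEED_MPH
full_lap = average_speed_and_velocity(SPEED_MPH, RADIUS_MILES, lap_time)
half_lap = average_speed_and_velocity(SPEED_MPH, RADIUS_MILES, lap_time / 2)

print(f"full lap: speed {full_lap[0]:.1f} mph, average velocity {full_lap[1]:.1f} mph")
print(f"half lap: speed {half_lap[0]:.1f} mph, average velocity {half_lap[1]:.1f} mph")
```

Over a full lap the speedometer reads 100 mph throughout while the average velocity collapses to zero. Halfway round, the car has at least ended up somewhere else, so its average velocity is well above zero even though the speed is unchanged.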

As Stephen Covey put it more bluntly: “Speed is irrelevant if you are going in the wrong direction.”

In December 2025, Sam Altman reportedly issued an internal “code red” at OpenAI: an emergency directive, triggered by Google’s launch of Gemini 3, to focus resources on improving ChatGPT whilst delaying initiatives such as advertising and Pulse. The flagship product that triggered the current AI frenzy, deployed to hundreds of millions of users, needed an internal alarm bell. Not because it had fundamentally failed. But because in the race to stay ahead, the product itself required urgent consolidation before it could bear the weight of continued competition.

This isn’t an isolated corporate moment. It’s the logical endpoint of what researchers Houser and Raymond identified in their 2021 analysis: an AI race that “lacks definition, a goal, or even an aspirational dream” — yet we keep selling the emotional story of “winning” without ever crossing a finish line.

Motion is not progress

We measure “leadership” in the AI race through release dates, funding rounds, model sizes, benchmark wins. These are metrics of motion, not progress. They tell us who is accelerating — not who is steering.

Lewis Carroll understood the danger: “If you don’t know where you are going, any road will get you there.”

And when steering disappears, two failures cascade.

First, products become the strategy: you ship because you can, not because you should.

Second, users become raw material: queries as data, confusion as engagement, vulnerability as conversion.

We’ve seen this pattern before. Facebook’s “move fast and break things” contributed to outcomes such as Cambridge Analytica, the amplification of ethnic violence in Myanmar, and algorithmic radicalisation. The company changed its motto, but only after harming democracies. That’s speed without velocity: motion that creates value for shareholders whilst externalising catastrophic harm onto societies.

The advertising rumours around OpenAI matter not as scandal, but as signal. When a product loses strategic clarity, monetisation stops being a consequence of direction and becomes a substitute for it. This is profitable aimlessness.

The speed trap

The real divide between the United States and Europe is no longer “regulation versus innovation.” It is speed versus velocity.

America has chosen speed. Deploy faster, scale bigger, monetise quicker. Ask forgiveness later — if anyone is still empowered to ask. This is innovation as extraction: build, break, capture, repeat. Humans become datasets. Long-term damage becomes acceptable externality. Consent becomes a legal checkbox rather than an ethical foundation.

Europe has chosen velocity. Not mere movement, but movement towards something: a society where rights don’t evaporate when a model improves, where consent is not a checkbox, where accountability has a name, a process, and an enforcement mechanism.

Europe isn’t slow. Europe is choosing direction.

Critics argue — rightly so — that European regulation has slowed deployment. That whilst Brussels debated AI Act provisions, Silicon Valley shipped products to hundreds of millions of users. That market leadership matters, and hesitation costs.

True. But incomplete.

Because the question isn’t “who shipped first?” It’s “who shipped something that can scale without collapsing, and who can articulate why it needed to exist at all?”

Speed means nothing if you cannot answer what problem you’re solving.

The OpenAI code red memo is an existence proof: shipping fast doesn’t guarantee shipping well. And when trust collapses, market leadership becomes a liability, not an asset.

The GDPR didn’t slow European innovation — it prevented European companies from building on foundations of exploited data that would later crumble.

The AI Act isn’t delaying deployment — it’s preventing deployment of systems that would later require expensive retrofitting or complete abandonment.

Europe isn’t slow. Europe is avoiding the expensive mistake of building systems that cannot scale with trust.

Guardrails are compasses

The European regulatory framework is doing something more sophisticated than restriction. It is building infrastructure.

GDPR doesn’t prohibit data usage — it demands transparency: what you collect, why you collect it, how long you keep it, who you share it with, and what rights people retain. That is not restriction. That is direction.

The AI Act doesn’t ban artificial intelligence. It categorises AI systems by risk level and mandates transparency, human oversight, and accountability proportional to potential harm. High-risk systems affecting employment, education, healthcare, and law enforcement must meet minimum standards before deployment. This is infrastructure for trust.

The Data Act ensures that data generated by connected devices remains accessible to users and can be shared across services, preventing monopolistic data hoarding. It creates foundations for competitive markets and genuine user agency.

But the real power of European regulation lies not in what it prohibits, but in what it insists upon:

1. Accountability means someone can answer when things go wrong. Not “the algorithm decided.” Not “the model optimised for engagement.” But a named human being who can explain the logic, justify the outcome, and bear responsibility for the consequences.

2. Transparency means systems can be understood. Not just by engineers who built them, but by citizens affected by them. If we cannot understand how decisions are made, we cannot contest them. And if we cannot contest them, we have abdicated governance to machines that cannot be held responsible.

3. Responsibility means consequences attach to choices. When an AI system fails, when it discriminates, when it causes harm — there is a legal pathway for redress. This is the foundation that allows innovation to scale with trust rather than collapse under its own externalities.

Consider Estonia’s digital governance framework. Every government algorithm that touches citizen data requires human approval, logging, and audit trails. The result? One of the world’s most digitalised societies — and one of the most trusted. Speed of deployment matched by clarity of accountability.

Or take the 2020 Dutch court ruling against SyRI, the government’s welfare fraud detection system. The system was fast, efficient, and… discriminatory. The court demanded transparency and accountability. The ruling didn’t stop innovation. It redirected it towards systems citizens could actually trust.

This is velocity: movement with direction, protected by accountability.

The race makes cooperation impossible

Here is the danger that few acknowledge: the race narrative makes global cooperation impossible.

Not difficult. Not unlikely. Structurally incompatible.

When nations frame AI development as existential competition, every shared standard becomes potential weakness. Every regulatory alignment becomes strategic vulnerability. Every moment of cooperation risks exploitation by adversaries who might be moving faster in the shadows.

As Houser and Raymond observed: an AI race can keep selling the emotional story of “winning” without ever crossing a finish line. The race narrative fractures the possibility of building AI systems grounded in shared values, mutual accountability, and collective benefit.

Without cooperation, we do not get faster innovation. We get incompatible systems, fragmented standards, and a technological landscape where human rights are jurisdiction-dependent accidents rather than universal guarantees. We get a world where the same AI system can respect privacy in Brussels, ignore it in San Francisco, and weaponise it in Beijing.

The future of AI depends on cooperation, infrastructure, and uniform standards: on shared definitions of harm, shared accountability mechanisms, shared ethical foundations. Velocity requires those shared bearings. Speed doesn’t. Speed just requires a competitor.

This is not speculation. This is happening now. And the window for choosing differently is closing.

The architecture of endurance

The Renaissance was not built in haste. It was intentional. European cathedrals took generations to complete. Their builders knew they would not see the finished work. Yet they built with precision, with vision, with responsibility to those who would inherit what they created. Ideas were allowed to mature. Beauty was given time to emerge. Architecture was designed for endurance, not obsolescence.

Contrast this with structures built for speed: temporary, disposable, optimised for immediate utility. They served the moment. Then vanished, leaving nothing worth preserving.

We face this choice now. Build fast and disposable, or build to last. Optimise for quarterly returns, or generational impact. Compete for market share, or create foundations worth inheriting.

Europe remembers this lesson. America seems determined to forget it.

Choosing direction

If you are responsible for AI strategy, governance, or risk management, velocity requires three shifts:

First, reframe your metrics. Speed metrics (deployment frequency, time-to-market, model performance) must be balanced by direction metrics: clarity of purpose, user safety outcomes, accountability pathways. If you cannot measure direction, you cannot manage it.

Second, build accountability chains. Every AI system needs a named human who can explain, justify, and take responsibility for its decisions. If you cannot identify that person, you cannot deploy the system responsibly. These chains cannot be retrofitted — they must be designed from day one. There’s an old IBM memo from 1979 worth remembering: “A computer can never be held accountable. Therefore a computer must never make a management decision.”
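One way to picture “designed from day one” is a deployment gate that refuses to proceed unless a named person is attached to the system. The sketch below is purely illustrative: `AISystem`, `AccountableOwner`, `approve_deployment`, and `MissingOwnerError` are hypothetical names invented for this example, not part of any real framework or of any regulation’s text.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


class MissingOwnerError(Exception):
    """Raised when a system has no named human accountable for it."""


@dataclass(frozen=True)
class AccountableOwner:
    # Hypothetical record of the person who answers for the system.
    name: str
    role: str


@dataclass
class AISystem:
    # Hypothetical description of a system awaiting deployment.
    name: str
    purpose: str
    owner: Optional[AccountableOwner] = None
    audit_log: List[str] = field(default_factory=list)


def approve_deployment(system: AISystem) -> str:
    """Record an approval in the audit log, or refuse if nobody is accountable."""
    if system.owner is None or not system.owner.name.strip():
        raise MissingOwnerError(
            f"{system.name}: no named human can explain or answer for this system"
        )
    timestamp = datetime.now(timezone.utc).isoformat()
    entry = (
        f"{timestamp} deployment of {system.name} approved by "
        f"{system.owner.name} ({system.owner.role})"
    )
    system.audit_log.append(entry)
    return entry


# Usage: the first system is blocked, the second is approved and logged.
unowned = AISystem(name="claims-triage", purpose="prioritise insurance claims")
owned = AISystem(
    name="claims-triage",
    purpose="prioritise insurance claims",
    owner=AccountableOwner(name="Jane Doe", role="Head of Claims Risk"),
)

try:
    approve_deployment(unowned)
except MissingOwnerError as err:
    print(f"blocked: {err}")

print(approve_deployment(owned))
```

The design choice that matters is that the owner is a required input to the gate rather than a field filled in after launch. A system without an owner cannot reach the audit log at all.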

Third, treat compliance as architecture. European regulations aren’t restrictions to work around — they’re scaffolding for building systems that can scale globally. If your system can meet GDPR and AI Act requirements, it can operate anywhere. If it cannot, you’re building for a fragmenting market.

The organisations that understand this now will have structural advantages when markets consolidate around trustworthy systems.

Beyond the finish line

The choice isn’t “Europe versus America.” That’s the race story trying to recruit you.

The choice is speed without accountability, or velocity with direction.

Europe’s bet: innovation scales only when trust scales with it. Real progress requires more than computational power — it demands wisdom.

America’s bet: trust can be retrofitted later. Speed creates its own legitimacy. Winning justifies the means.

The code red memo is what it looks like when “later” arrives.

Every algorithm, every neural network carries within it the echoes of human decision-making. Our challenge is not to fear these technologies, but to consciously shape their ethical foundations. Our deepest innovations emerge not from machines, but from the human capacity to reimagine possibility.

The question before us is whether we’ll exercise that capacity — or surrender it to the illusion that speed equals progress.

Author’s Note: The thinking, research, and arguments in this article are entirely human. AI reviewed form and structure only.

Velocity over speed: why the AI race has already failed was originally published in UX Collective on Medium.