The field of UX is undergoing a dizzying genAI takeover.

TLDR: This is the year that AI has taken over UX. In this article, I explain why and give an update on my method of using AI for UX research, focussing on how ChatGPT’s Deep Research functionality can enable a significant acceleration of qualitative research analysis.

(Note: I will use the terms ‘AI’, ‘genAI’ and ‘model’ as shorthand for ‘generative AI’, primarily referring to Large Language Models (LLMs), the specific type of generative AI that creates text.)

I published my original Medium piece on AI for UX ten months ago, and it has since become a sleeper hit with thousands of views, far more than any other article on this blog so far. But ten months in the realm of generative AI is like ten dog years; the pace of change has rendered parts of that piece obsolete.

So, when I was contacted by my alma mater, the Department of Human-Computer Interaction Design at City St George’s, University of London, to speak at their annual conference, I jumped at the chance to renew my previous stake in this subject, especially once my old professor revealed that they had contacted me partly because of the popularity of my Medium piece.

Here is my second take on AI for UX, updated for 2025 and encompassing my talk at the HCID 2025 conference (which went rather well, even if I say so myself).

It has happened. In 2025, AI has taken over UX

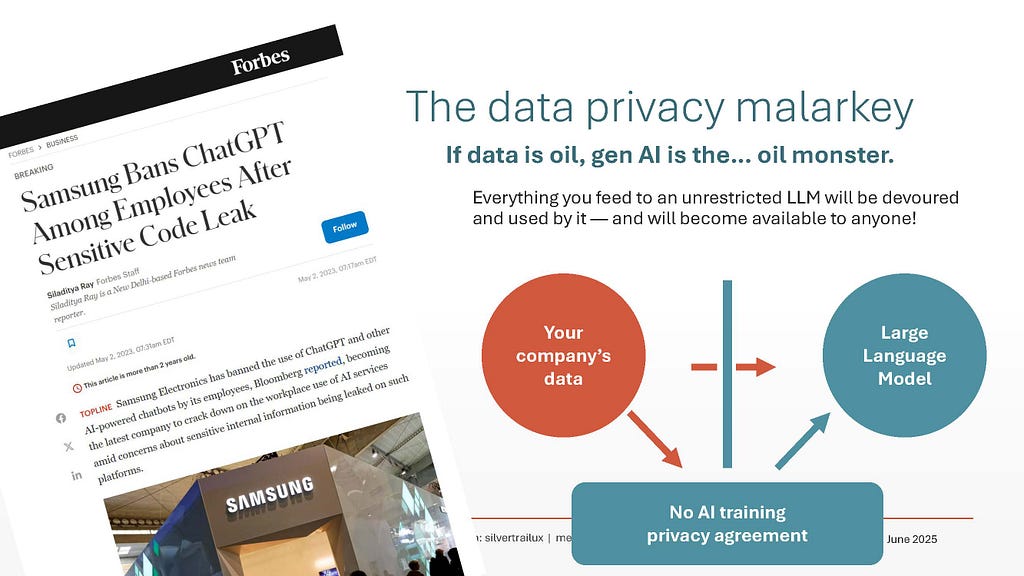

When I started using AI in my work at the beginning of 2024, I felt like an intrepid explorer venturing into an alien land. Sure, AI had been around for a while and most of us had fooled around with it at home to generate store-cupboard recipes or visual slop. But few of us had the opportunity to let AI seriously reshape our work, largely due to the ‘AI-will-feed-on-anything-you-give-it’ data privacy problems and the resulting strict bans on using AI for work.

But 2024 was the dawn of private AI. Providers like OpenAI and Anthropic finally ‘walled off’ their LLMs to keep business data private and, in doing so, opened the gates to private AI, on a subscription basis, for organisations such as mine.

Fast forward to 2025, and a Nielsen Norman Group study informs me that UX professionals are among the heaviest AI users of all. A key statistic from this study: the average UXer today uses LLMs a staggering 750 times more than workers in the other professions sampled!

Why do UXers love AI so much?

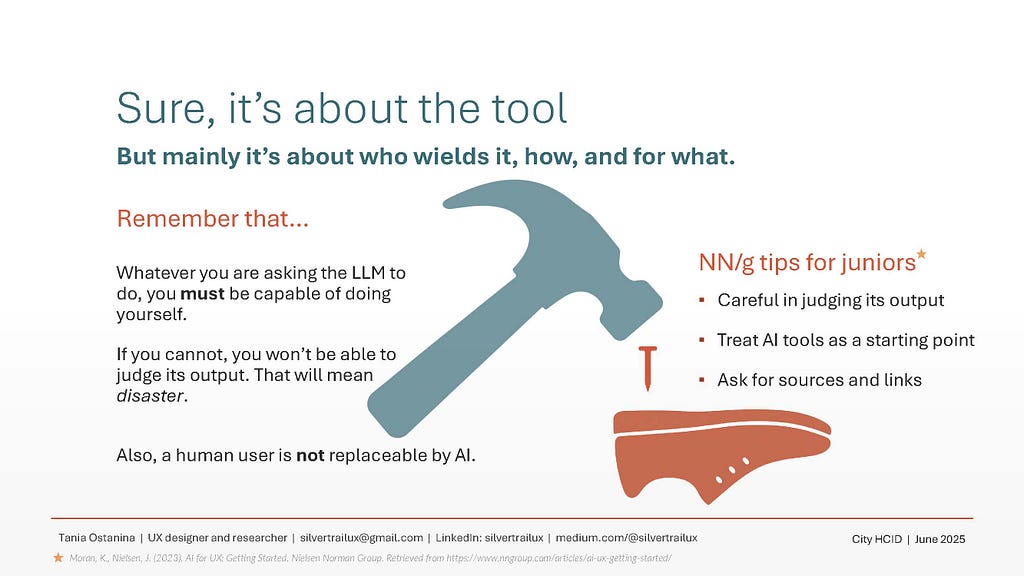

You have a hammer, but not everything you see is a nail. From cheating on learning assignments (honestly, HCID/UX students reading this, if you have tried using AI to generate user interview transcripts, please just stop) to an unfortunate music fan provoking the wrath of Nick Cave by sending him a ChatGPT-generated song “in the style of Nick Cave”, there are many situations where using AI is not a good idea.

The media loves to sensationalise such stories and over time this contributes to a layered ‘feeling’ in the zeitgeist that somehow all AI is useless or harmful, no matter its application.

I disagree.

For we can hit some nails with this hammer. GenAI’s limitations and capabilities are a good match for the limitations and requirements of certain types of UX research. Specifically:

- GenAI excels at summarising text data → UX research creates a lot of text data.

- In GenAI, hallucinations are a given → conventional UX research can usually cope with a certain amount of ‘fuzziness’. (But if your work lies in highly precise fields, banning the use of AI would be sensible).

- GenAI, by its nature, can only create derivative output → UXers rarely create output that is entirely original. Instead, our strength lies in adapting what already exists.

And that, ladies, gents and folks, is why UXers love AI. It’s a good match and a genuinely useful tool for us. There is no controversy in the industry about using it*, bar the (now largely resolved) data privacy limitations. No-one will care if your research report has been AI-generated, as long as the report is well written and provides the data needed.

* However, there should absolutely be more controversy about the ethics of AI. I for one am puzzled by how little the UX profession is talking about it, and will continue to stir the pot by highlighting the past and current ethical failings of AI technology.

The meat of it

Anyway, onto the recipe.

I will give you a real-world example of a UX study from a recent project where I used AI to analyse user data. All identifying information has been anonymised.

I am using ChatGPT Enterprise, the private ‘walled off’ version with the latest Deep Research functionality. Deep Research is a specialised AI ‘agent’ that OpenAI launched in February 2025 with the lofty claim that it is “built for people who do intensive knowledge work”.

If you don’t have access to Enterprise, you can get your hands on Deep Research via a $20 ChatGPT Plus subscription (albeit, as far as I’m aware, your data won’t be ‘walled off’).

My 2025 method starts in the same way as my previous one, with the three steps:

- Feed: give it the stuff. The variety of ‘stuff’ that you can feed it is now vastly expanded: gigantic datasets, JSON files, screenshots, web links, PDF files, what have you. ChatGPT Enterprise has become particularly adept at reading PNGs and can easily understand screenshotted text.

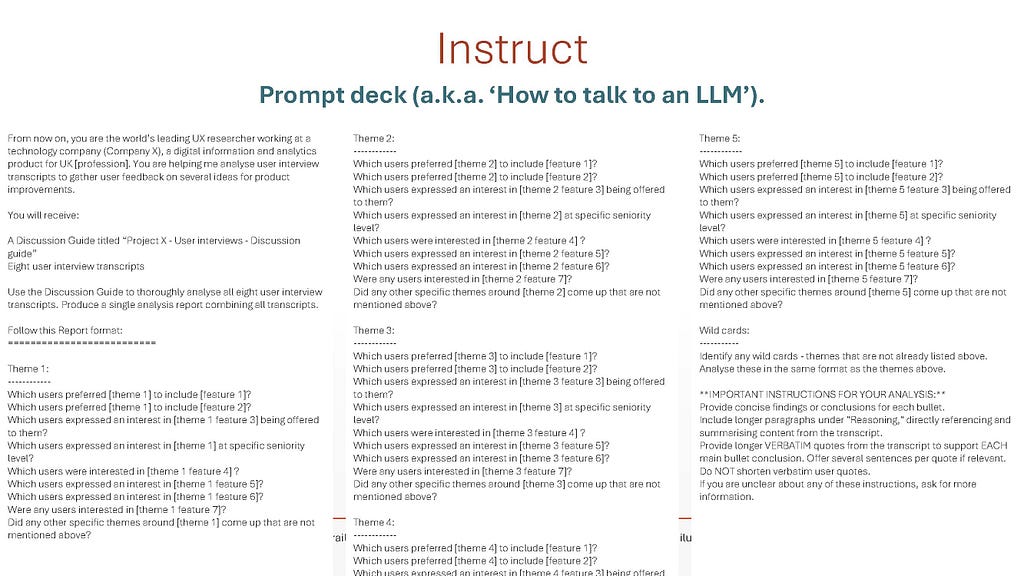

- Instruct: tell it what to do. This still follows the Prompt Deck rules I’ve outlined in my original article.

- Harvest: get the required information from it and use it as needed, for example, for creating reports for your organisation’s senior leadership.

Step 1: Feed

The beauty of Deep Research is that it can cope with very large amounts of data and is more accurate than most conventional models, such as GPT-4o and Claude 3.7 Sonnet. As I’ve discovered, as well as feeding it documents such as interview transcripts and screenshots of your prototype, you can tell it to anchor onto one particular document and let that document dictate the direction of the model’s thinking and analysis.

In my case study, this document is the Discussion Guide / Research Plan.

The Discussion Guide, often created by UXers collaboratively with product managers, typically contains:

- Research context: What is the research project? What product is it for? Why is it being conducted?

- Research objectives and hypotheses: What are you trying to prove or disprove with this research?

- Information on interview participants/users: Who are they? What were the recruitment criteria? If (as in my case) they are professionals in a specific narrow field, what is it?

- Interview structure, including interview themes and types of questions asked, all linking to the research objectives and hypotheses.

Step 2: Instruct

So, you’ve fed the model your interview transcripts, screenshots and Discussion Guide. How do you tell it what to do with it all?

No more lengthy chats

One crucial difference between Deep Research and conventional models is that Deep Research eliminates the need for extensive toing-and-froing. Instead, you have one go at it and after a few clarifying questions the model will commence its ‘task’, spitting out a single, highly detailed report based on your instructions. You only get to prompt once, so you’d better ensure your prompt cuts the mustard.

My prompting method remains largely the same as in my 2024 article: using the handy Prompt Deck to construct the prompt. I won’t cover every prompt technique again here but you can refer to that article for full details.

However, the weight of some prompt ‘cards’ versus others has shifted. These are now the most useful ‘cards’ by far:

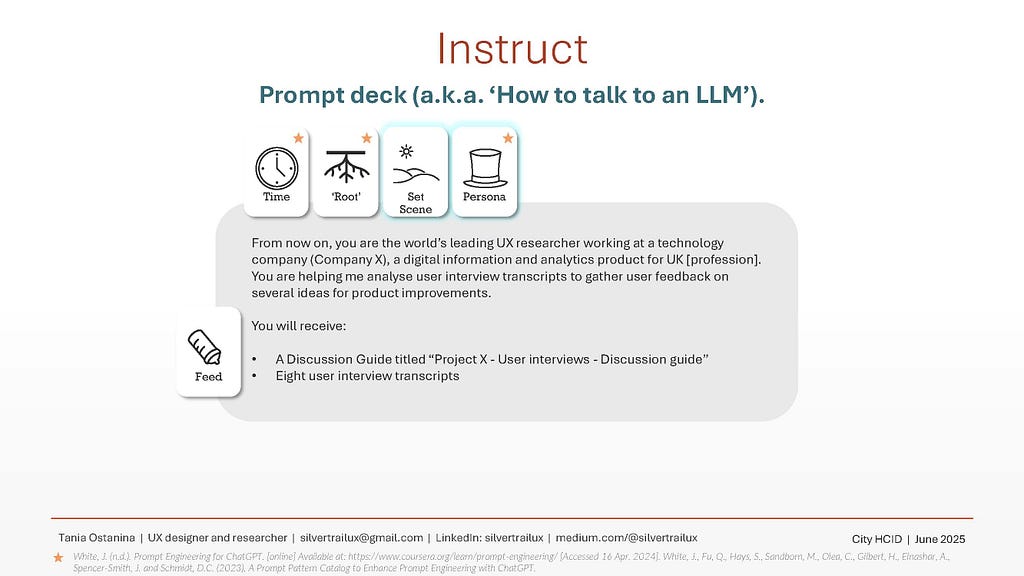

Prompt card: Set Scene

Explain the research purpose, which company and product it’s for, who the users were and which documents the model will receive; anchor it onto your key document, if you have one (in this case, the Discussion Guide).

Prompt card: Persona

Describe who you want the model to act as, e.g. “The world’s leading UX researcher working at a technology company (Company X), a digital information and analytics product for UK [profession].”

Prompt card: Template

Telling AI how to structure its output remains one of the key techniques. You can tell it to follow the structure of the Discussion Guide (if relevant) or give it an example template with all the headings.

Prompt card: Chain of Thought

This incredibly useful technique is widely used by AI prompt engineers today. Put simply, if you ask the AI to explain, step by step, how it arrived at a particular conclusion, the accuracy of that conclusion tends to increase.

Prompt card: Wild Card (new)

A colleague came up with this one. In many user studies at my company, including this example, we already have a preset structure of what we want to find out from users. But what if the users said something we didn’t expect? If you don’t ask the model to find unexpected things, it won’t**. You should look for these anomalies yourself, but why not also get the model to think about them?

** I’m reminded of the hilariously creepy OpenAI video in which two visual/voice AI assistants talk to a human presenter while another person walks behind him making bunny ears. Both assistants ignore the bunny ears until explicitly prompted.

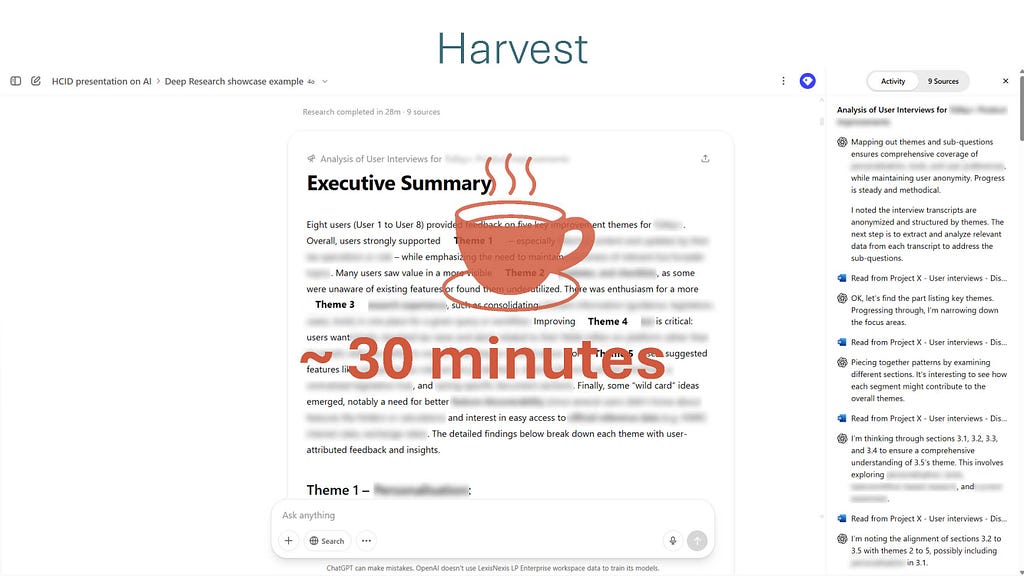
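Because Deep Research gives you only one shot, it can help to keep your cards as separate, reusable pieces of text and stitch them together the same way every time. The Prompt Deck itself is a thinking tool, not software, so the sketch below is purely hypothetical: `PromptCard` and `build_prompt` are names I made up for illustration, and the card texts are placeholders loosely based on the examples above. The output is plain text that you would paste into ChatGPT before selecting ‘Deep Research’.

```python
from dataclasses import dataclass


@dataclass
class PromptCard:
    """One hypothetical 'card' from the Prompt Deck: a named section of the final prompt."""
    name: str
    text: str


def build_prompt(cards: list[PromptCard]) -> str:
    """Join the non-empty cards into a single prompt, one labelled section per card."""
    sections = []
    for card in cards:
        body = card.text.strip()
        if body:  # skip any card you decided not to use this time
            sections.append(f"## {card.name}\n{body}")
    return "\n\n".join(sections)


# Placeholder card texts for illustration only; replace them with your own study details.
cards = [
    PromptCard("Set Scene", "You will receive interview transcripts, prototype screenshots "
               "and a Discussion Guide. Anchor your analysis on the Discussion Guide."),
    PromptCard("Persona", "Act as the world's leading UX researcher working at a "
               "technology company (Company X)."),
    PromptCard("Template", "Structure the report using the Discussion Guide's themes as headings."),
    PromptCard("Chain of Thought", "For every finding, explain your reasoning and cite "
               "the transcript it came from."),
    PromptCard("Wild Card", "End with a section listing anything participants said "
               "that the Discussion Guide did not anticipate."),
]

prompt = build_prompt(cards)
print(prompt)
```

Keeping the cards separate means the Wild Card or Template text can be reused across studies while only the Set Scene card changes.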

Step 3: Harvest

Once you’ve entered your prompt and documents, selected ‘Deep Research’ and pressed ‘submit’, get yourself a cup of coffee. Producing the requested report could take the AI up to an hour, depending on the complexity of the ‘task’. In my case study, it took around 30 minutes.

Nice, you’ve got your report and it’s 50 pages long! Here’s the least entertaining part of the whole process:

!CHECK! EVERY! WORD!!!

While Deep Research’s accuracy is higher than that of most models, it’s still nowhere near 100% and its hallucinations can look particularly convincing. So, don’t trust it. AI to this day remains an eager but inexperienced assistant whose work must be checked. Only you, the human in the loop, have the expertise and the interpretative power to understand what really happened in the user interviews, to veto AI’s incorrect conclusions and to spot any ‘bunny ears’ that despite your ‘Wild Card’ prompting, the AI may have missed.

A neat feature of Deep Research is the right-hand panel where you can see what steps it took to arrive at its conclusions. The weirdest hallucinations can occur here and are great for a laugh. I’ve noticed that these types of hallucinations rarely make it into the final report, probably thanks to the ‘Chain of Thought’ process already baked into the Deep Research functionality.

Important tip: because Deep Research performs one task at a time rather than holding a to-and-fro conversation, you won’t be able to correct the report by chatting with the AI. Do it the old-fashioned way: download the report as a Word document and edit it there.

After making the corrections, you can upload the Word report back into ChatGPT and get it to condense the 50-pager into a succinct PowerPoint-style presentation. That’s the easiest step ever: just use the ‘Template’ prompt technique with a conventional AI model (4o, o3 etc.).

A note on user quotes

Conventional AI models, in my experience, are shit at pulling out verbatim user quotes from interview transcripts, opting to paraphrase them instead. Trust me, I tried everything! Deep Research’s citations, in contrast, reference the source material directly, so user quotes can be cross-checked much more easily. I’d still advise checking them manually, especially the ones you will use in your final reporting.
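If your transcripts are available as plain text, a small script can do a first pass of that cross-check for you by flagging any quote that does not appear word for word in any transcript. This is a minimal sketch under my own assumptions: the function names and the sample data are hypothetical, and it only normalises case, curly quotes and whitespace. A quote that passes is verbatim, but you still need to check it is attributed to the right participant and used in the right context.

```python
import re


def normalise(text: str) -> str:
    """Lower-case, straighten curly quotes/apostrophes and collapse whitespace,
    so formatting differences don't hide a genuine verbatim match."""
    text = text.lower()
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip()


def check_quotes(quotes: list[str], transcripts: dict[str, str]) -> dict[str, list[str]]:
    """Map each quote to the transcripts that contain it verbatim (after normalisation).
    An empty list means the quote wasn't found: it may be paraphrased or hallucinated."""
    normalised = {name: normalise(body) for name, body in transcripts.items()}
    return {
        quote: [name for name, body in normalised.items() if normalise(quote) in body]
        for quote in quotes
    }


# Hypothetical example data
transcripts = {
    "P01": "Honestly, I\u2019d never use the search bar.\nIt just   gives me junk.",
    "P02": "The filters are great once you find them.",
}
quotes = [
    "I'd never use the search bar. It just gives me junk.",
    "The filters are brilliant once you find them.",  # paraphrased, so should be flagged
]

for quote, sources in check_quotes(quotes, transcripts).items():
    status = ", ".join(sources) if sources else "NOT FOUND, check manually"
    print(f"{status}: {quote}")
```

Exact substring matching is deliberately strict: a ‘near miss’ is precisely the kind of paraphrase you want flagged for a human to look at.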

Deep Research for UX: the lowdown

So, Deep Research has spent ~30 minutes compiling its analysis. Even with the human time allocated to prompt writing, document preparation, checks and corrections, this is faster than doing the entire analysis manually — and definitely faster than my previous method that relied on conventional AI models.

A word of caution: it can be tempting to outsource the analysis to Deep Research entirely and skip the checking step. That would be a big mistake. Ultimately, it is you, and not AI, whose neck is on the line if Deep Research gets things wrong. Even as the models get more and more advanced, it remains ever-important to stay vigilant and intervene where they get things wrong.

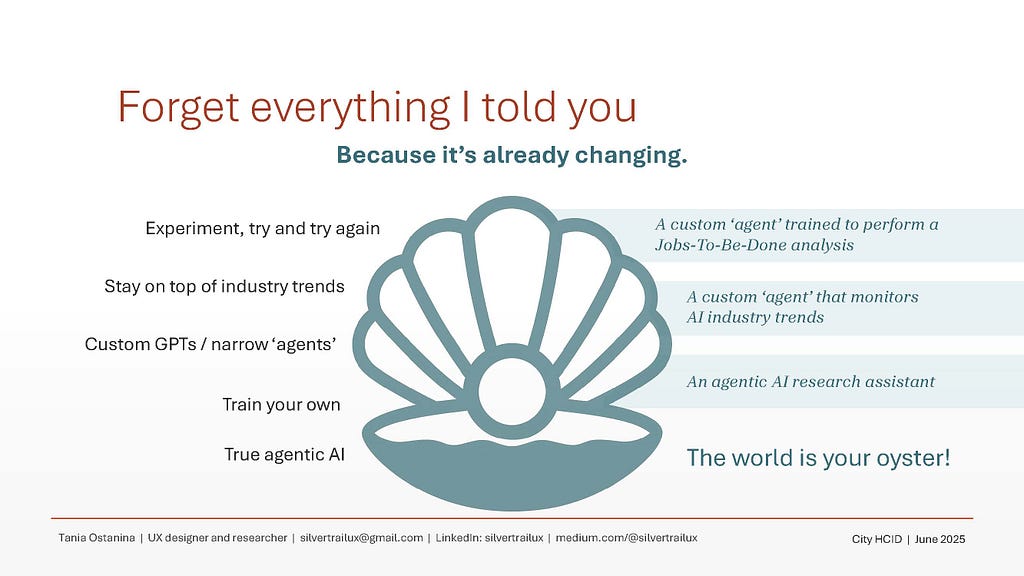

Forget everything I told you

In my HCID conference talk, this was my favourite slide. There’s a certain pleasurable perversity in telling the listeners a bunch of useful, actionable information and then telling them to throw it in the bin.

But that’s the reality of the AI landscape. Things change on a weekly basis and the only way to keep up is to keep evolving with it. For example, my talk didn’t yet mention agentic AI, but at the time of writing it is the biggest (and also the fuzziest!) AI trend of 2025. Chances are, if and when I write AI for UX v3.0, I’ll be talking about agentic AI.

Or maybe not! Who knows!

How I use generative AI for research in 2025 was originally published in UX Collective on Medium.